Split-Screen UX

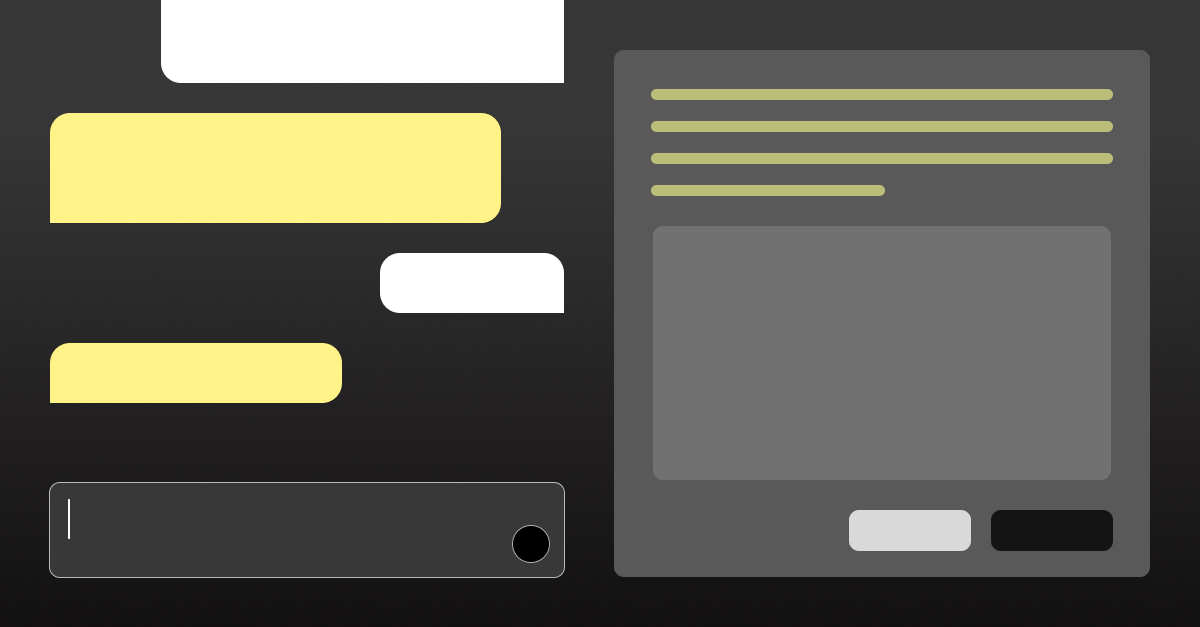

If you’ve used any modern AI tool—especially those in coding, writing, or image generation—you’ve likely noticed a familiar interface pattern: a chat panel on the left and a generative panel on the right. One side holds your conversation with the AI; the other, the result of that conversation. But as AI interfaces mature and become more mainstream, an interesting design challenge emerges: which panel do users actually want to interact with?

As a UX designer, I find this question top of mind. Take a simple example: you ask the AI to generate an image of a candle. The image shows up in the right panel. Now you want to add a flame to it. Do you:

- Type in the chat: “Can you add a flame to the candle?”

- Click on the image, use a visual editing tool to circle the top of the candle, and then tap “Edit”?

Both actions achieve the same goal. But they speak to very different interaction preferences—and cognitive modes.
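One way to picture "same goal, different path" is to have both inputs produce the same kind of request. The sketch below is illustrative only: `EditRequest`, `from_chat`, and `from_canvas` are hypothetical names, not part of any real tool, and the region is assumed to be a simple bounding box.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical bounding box: (x, y, width, height) in image pixels.
Region = Tuple[int, int, int, int]


@dataclass(frozen=True)
class EditRequest:
    """A single edit intent, regardless of which panel it came from."""
    instruction: str
    region: Optional[Region]  # None when the user only described the change
    source: str               # "chat" or "canvas"


def from_chat(message: str) -> EditRequest:
    # Chat path: the words carry everything, including where to edit.
    return EditRequest(instruction=message, region=None, source="chat")


def from_canvas(region: Region, instruction: str) -> EditRequest:
    # Canvas path: the circled area says where, the short prompt says what.
    return EditRequest(instruction=instruction, region=region, source="canvas")


chat_edit = from_chat("Can you add a flame to the candle?")
canvas_edit = from_canvas((120, 40, 60, 60), "Add a flame")
```

Both values could be handed to the same downstream generation step. Keeping `source` on the request also leaves a record of which panel the person actually used.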

A Familiar Pattern: Maps vs. Lists

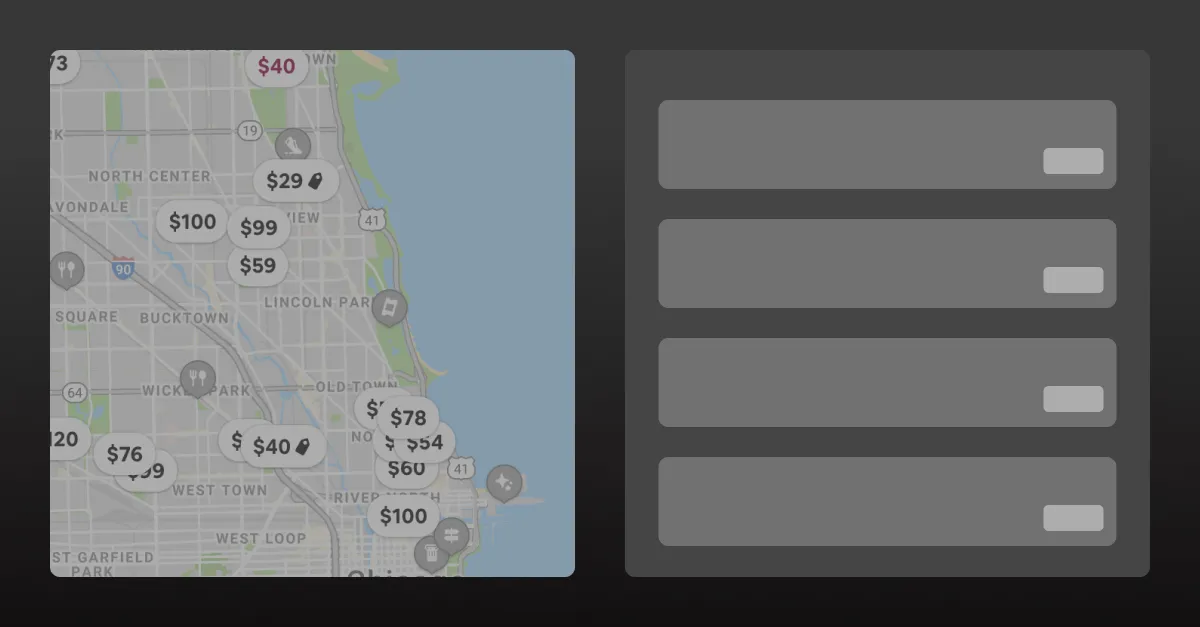

This duality isn’t new. It reminds me of an old UX problem we’ve tackled for years: maps vs. lists—especially common on hotel websites. You land on a page that shows search results in two formats: on a map and in a list. Some users immediately gravitate toward the map, zooming and panning to see where the hotels or properties are. Others skip the map entirely and dive into the list, sorting by price, rating, or photos.

In research I’ve done in the past, it was often a near 50/50 split. One group thinks spatially and prefers navigating through maps. The other thinks visually or economically, focusing more on how the hotels or properties look or how much they cost. Each group filters and interacts with the exact same content in fundamentally different ways.

Applying That Lens to AI Interfaces

I believe we’re facing a similar divergence in AI UX right now.

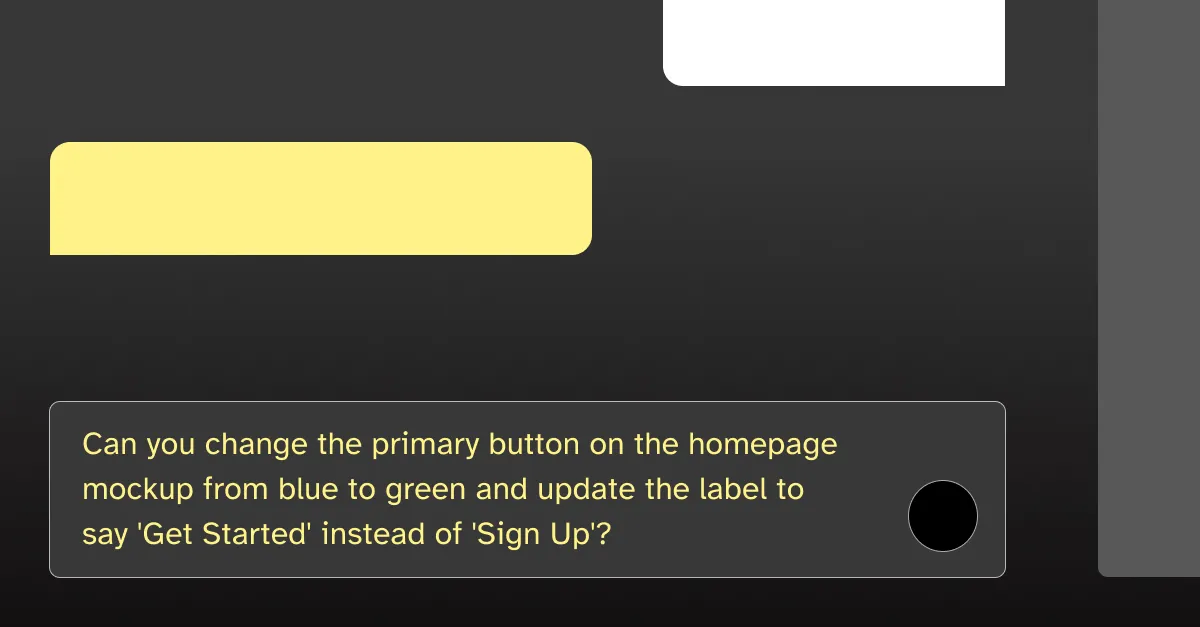

Chat-first

Users prefer language. They enjoy the feeling of having a conversation with the AI, even when issuing specific commands.

Visual-first

Users prefer direct manipulation. They want to touch, drag, circle, and edit the thing they’re working on—especially in visual domains.

It’s not about one mode being better than the other. The real challenge lies in how the interface adapts to each user’s habits and mental model. As designers, we’re entering a phase where we need to think beyond offering both options—we need to consider how the interface can intelligently respond to how a person prefers to interact. Does the user lean into chat-first commands, or do they prefer visual manipulation?

Understanding and adapting to that behavior might be the key to building truly intuitive AI experiences.
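As a rough sketch of what "adapting to that behavior" could mean in practice, an interface might tally which panel a person uses and lean toward it once there is enough evidence. Everything here is an assumption for illustration: the `preferred_mode` function, the event labels, and the `min_events` and `threshold` values are hypothetical, not a tested heuristic.

```python
from collections import Counter
from typing import Iterable


def preferred_mode(
    events: Iterable[str],
    min_events: int = 5,     # assumed: don't guess from too little data
    threshold: float = 0.7,  # assumed: share needed to call a preference
) -> str:
    """Return "chat-first", "visual-first", or "balanced".

    `events` is a sequence of "chat" or "canvas" labels, one per edit
    the user made. Any other label is ignored.
    """
    counts = Counter(e for e in events if e in ("chat", "canvas"))
    total = counts["chat"] + counts["canvas"]
    if total < min_events:
        return "balanced"

    chat_share = counts["chat"] / total
    if chat_share >= threshold:
        return "chat-first"
    if chat_share <= 1 - threshold:
        return "visual-first"
    return "balanced"


session = ["chat", "chat", "canvas", "chat", "chat", "chat"]
mode = preferred_mode(session)
```

A "balanced" result keeps both panels equally prominent, which is also the fallback for new users with little history.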

Looking Ahead

These are still early days for AI-powered interfaces. Much of the current usage comes from early adopters—tech-forward, comfortable with ambiguity. But as AI tools begin to replace traditional web flows and reach the mainstream, we’ll need to move beyond just supporting diverse interaction styles.

The chat panel and the generative panel aren’t just UI choices; they represent two fundamentally different ways of thinking. The next challenge is designing interfaces that don’t just offer both, but adapt to how each person prefers to interact.