The UX in AI

Lately, my LinkedIn feed has been flooded with takes on AI in UX, some exciting, others unsettling. Companies like Duolingo and Shopify are experimenting with scaling AI across the org. In some cases, they’re even paring back what we traditionally think of as the UX process.

What does that mean? The process being pared back is what some call the “UX lifecycle.” At its core, the change is a shift from deep user understanding toward visual speed, with AI helping you get to something visual faster than ever.

And that’s both incredible and incomplete.

The Rise of Visual Speed

One undeniable benefit of AI in UX: we can get to something visual really fast. What used to take a sprint or two—mockups, wireframes, click-through prototypes—can now happen in minutes with the right prompt.

But the downside? We’re seeing less of the thinking behind the design. Less logic, fewer flows, almost no interaction documentation. Designers skip ahead to “what it looks like” instead of fully mapping “how it works” or “why it matters.”

The Human Layer Still Matters

Plenty of people have called this out, especially on LinkedIn. Empathy, nuance, and contextual understanding—these are still out of reach for large language models. And that’s okay. AI isn’t supposed to replace humans; it should amplify what we do best.

While tools and technologies evolve, user goals and needs remain constant. The essence of UX has always been about empathy—understanding the user’s journey and crafting experiences that align with their needs. That doesn’t change in the AI era. If anything, it becomes even more critical as we balance the capabilities of AI with the timeless principles of human-centered design.

What’s Old Is New Again

Here’s where it gets interesting for me personally. I started in this field as an Information Architect. That role faded a bit over the last decade, swallowed up by “UX designer” as a catchall. But now, those old IA muscles are coming back.

Why? Because logic matters again.

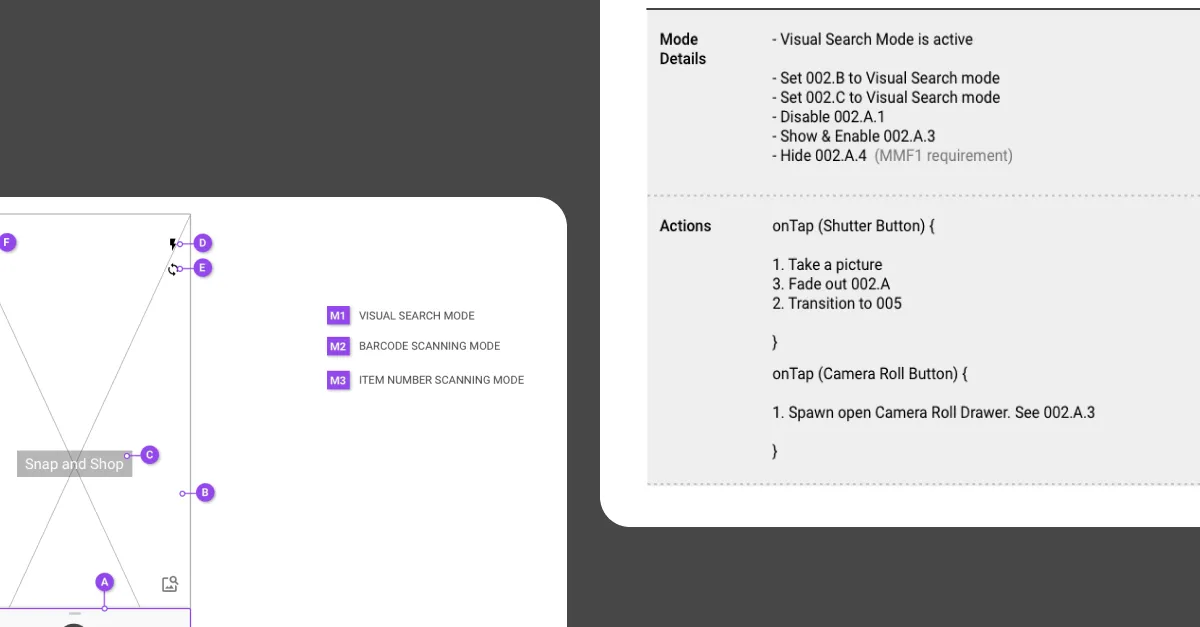

Understanding use cases and edge cases, designing user flows, capturing interaction logic: these are now critical if you want to use AI effectively. You can’t just say “create a login form.” You need to spell out the answers to questions like:

- What happens if someone’s account is blocked?

- What if their password was reset by an admin?

- What if they’re logging in from a shared device?

These are inputs. These are prompts. And they’re incredibly powerful when fed into an LLM. In many ways, documenting a user flow is now prompt engineering for product design.
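To make that concrete, here is a minimal sketch of how a documented flow could be turned into prompt text. Everything in it is an assumption for illustration: the `EdgeCase` and `UserFlow` names are hypothetical, and the behaviors attached to each edge case are placeholder answers, not recommendations. The conditions are the three login questions above.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeCase:
    condition: str  # the situation, e.g. "the account is blocked"
    behavior: str   # what the interface should do (placeholder answers below)


@dataclass
class UserFlow:
    goal: str
    edge_cases: list[EdgeCase] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the flow as plain text that could be pasted into an LLM."""
        lines = [f"Design: {self.goal}", "Handle these edge cases:"]
        for number, case in enumerate(self.edge_cases, start=1):
            lines.append(f"{number}. If {case.condition}, {case.behavior}.")
        return "\n".join(lines)


# Conditions come from the questions above; the behaviors are hypothetical
# and would be replaced by a team's real business rules.
login_flow = UserFlow(
    goal="a login form",
    edge_cases=[
        EdgeCase("the account is blocked", "explain why and link to support"),
        EdgeCase("the password was reset by an admin", "require a new password before continuing"),
        EdgeCase("the user is on a shared device", "do not offer to remember the login"),
    ],
)

print(login_flow.to_prompt())
```

The point is not the code itself. Once the flow is written down as structured conditions and behaviors, the prompt more or less writes itself, and the same document still works for engineers.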

Advice for Designers Entering the AI Era

If you’re a designer stepping into the AI world, don’t skip over the boring stuff. Get good at:

- Writing clear use cases

- Building user flow diagrams

- Documenting edge cases and logic

- Mapping business rules to interface behavior

This documentation doesn’t just help engineers anymore—it helps AI help you.

The same thinking applies to how people interact with AI products, whether through chat or through direct visual manipulation. It’s not about one mode being better than the other. The real challenge lies in how the interface adapts to each user’s habits and mental model. As designers, we’re entering a phase where we need to think beyond offering both options and consider how the interface can intelligently respond to how a person prefers to interact. Does the user lean into chat-first commands, or do they prefer visual manipulation?

Understanding and adapting to that behavior might be the key to building truly intuitive AI experiences.
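One very simple way to picture that kind of adaptation is sketched below. This is an assumption on my part, not a description of how any product works: the `ModePreference` class is hypothetical, and the minimum action count and threshold are arbitrary values chosen for illustration. It counts which mode a person actually uses and only declares a preference once the evidence is reasonably clear.

```python
from collections import Counter
from typing import Optional

MODES = ("chat", "visual")


class ModePreference:
    """Tracks which interaction mode a user actually uses."""

    def __init__(self, min_actions: int = 10, threshold: float = 0.7):
        self.counts = Counter()
        self.min_actions = min_actions  # assumed: wait for enough evidence
        self.threshold = threshold      # assumed: share needed to call it a preference

    def record(self, mode: str) -> None:
        if mode not in MODES:
            raise ValueError(f"unknown mode: {mode}")
        self.counts[mode] += 1

    def preferred(self) -> Optional[str]:
        """Return the dominant mode, or None if there is no clear preference yet."""
        total = sum(self.counts.values())
        if total < self.min_actions:
            return None
        mode, count = self.counts.most_common(1)[0]
        return mode if count / total >= self.threshold else None


# Usage: the interface could open in the preferred mode, and keep
# offering both when preferred() returns None.
preference = ModePreference(min_actions=4)
for action in ["chat", "chat", "visual", "chat"]:
    preference.record(action)
print(preference.preferred())
```

Returning no preference when the signal is mixed is a deliberate choice here. Guessing wrong about how someone likes to work is probably worse than leaving both options visible.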

Going Deeper With AI

And here’s the thing: AI doesn’t just speed up the visual. It can go deeper. If you used to be able to research a user to a depth of four feet, AI lets you go to fifty. That could mean surfacing patterns from 20 years of usability data or generating a podcast to summarize qualitative insights.

It’s not just about speed—it’s about depth.

If you want to dive into that idea more, I’m writing a separate piece on how AI can resurface and synthesize decades of buried research. Stay tuned.